Digital Twin Visual Robot Manipulator

A digital twin for a robotic manipulator using Vision-Language-Action (VLA) models

Abstract

This report details the development of a digital twin of a robotic manipulator built on the ROS framework. The robot uses vision for a range of tasks. The system integrates hardware components such as an Nvidia Jetson Orin Nano, serial bus servo motor drivers, and 12 V, 30 kg·cm servo motors, and combines computer vision algorithms with control strategies so that it adapts robustly to its environment. It also includes a control panel, running on an iPad, that can be used to control the manipulator and to generate tasks for it. The combined research and practical implementation confirm the robot’s effectiveness in adapting to its environment and handling complex tasks.

Introduction

Vision-Language-Action (VLA) models are shaping modern robotic systems, particularly in applications that require visual feedback, fast and accurate movement, and multi-task handling. Traditional methods still dominate specialized tasks because of their accuracy and precision. This project explores the use of vision models on simple pick-and-place tasks and builds on those results to achieve satisfactory performance on more complex problems. A camera is used for depth estimation, that is, for estimating how far the end effector is from the target object. The high-level computation, mainly the computer vision tasks, runs on an Nvidia Jetson Orin Nano.
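
The report does not describe how depth is obtained from a single camera. One simple approach, assumed here rather than taken from the project, uses the pinhole camera model: if the object's real width W is known and the camera's focal length f (in pixels) has been found by calibration, an object that spans w pixels in the image lies at a distance Z = f·W / w from the camera. The function name `estimate_distance` and the numbers below are illustrative.

```python
def estimate_distance(focal_px: float, real_width_m: float, pixel_width: float) -> float:
    """Camera-to-object distance in metres via the pinhole model Z = f * W / w.

    Assumptions (not from the report):
      focal_px     -- focal length in pixels, from a prior camera calibration
      real_width_m -- true width of the object in metres, known in advance
      pixel_width  -- width of the object's bounding box in the image, in pixels
    """
    if pixel_width <= 0:
        raise ValueError("pixel_width must be positive")
    return focal_px * real_width_m / pixel_width


if __name__ == "__main__":
    # Hypothetical values: 1000 px focal length, 5 cm wide object spanning 125 px.
    print(estimate_distance(1000.0, 0.05, 125.0))  # 0.4
```

The result is measured from the camera's optical centre; converting it into an end-effector distance also requires the fixed camera-to-gripper offset, which the report does not give.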

Hardware Setup

The robot uses six 12 V servo motors, providing six degrees of freedom (DoF). Its structure was 3D printed. Key components include:

- Processing: Nvidia Jetson Orin Nano running ROS2 for high-level control.

- Control: Serial Bus Servo Motor Drivers managing six 12V servo motors.

- Sensors: Raspberry Pi HQ camera for 3D vision.

- Display and Command: iPad Air 1 as the command and control device (any comparable device can be used).
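
The six servos act as six revolute joints, so the end-effector pose can be computed by chaining one homogeneous transform per joint. The following is a minimal forward-kinematics sketch in plain Python using the standard Denavit-Hartenberg (DH) convention. The DH table is hypothetical: the real link lengths and offsets would have to be read from the project's CAD or URDF model.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]

# Hypothetical DH table, one row (d, a, alpha) per joint, in metres and radians.
# These numbers are placeholders, NOT measurements of the project's arm.
DH_TABLE: List[Tuple[float, float, float]] = [
    (0.10, 0.00, math.pi / 2),
    (0.00, 0.12, 0.0),
    (0.00, 0.10, 0.0),
    (0.00, 0.00, math.pi / 2),
    (0.08, 0.00, -math.pi / 2),
    (0.05, 0.00, 0.0),
]


def dh_transform(theta: float, d: float, a: float, alpha: float) -> Matrix:
    """Standard DH link transform: Rz(theta) * Tz(d) * Tx(a) * Rx(alpha)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Multiply two 4x4 homogeneous transforms."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def forward_kinematics(joint_angles: Sequence[float], dh_table=DH_TABLE) -> Matrix:
    """Base-to-end-effector transform for the given joint angles (radians)."""
    if len(joint_angles) != len(dh_table):
        raise ValueError("need one joint angle per DH row")
    T = [[float(i == j) for j in range(4)] for i in range(4)]  # start from identity
    for theta, (d, a, alpha) in zip(joint_angles, dh_table):
        T = matmul(T, dh_transform(theta, d, a, alpha))
    return T


if __name__ == "__main__":
    T = forward_kinematics([0.0] * 6)
    x, y, z = T[0][3], T[1][3], T[2][3]
    print(f"end effector at x={x:.3f} m, y={y:.3f} m, z={z:.3f} m")
```

For a digital twin, the same joint angles sent to the servos can be fed to a function like this (or to the URDF in simulation), and the predicted end-effector positions compared with the physical arm as one way to check that the model matches the hardware.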

Simulation and Software Setup

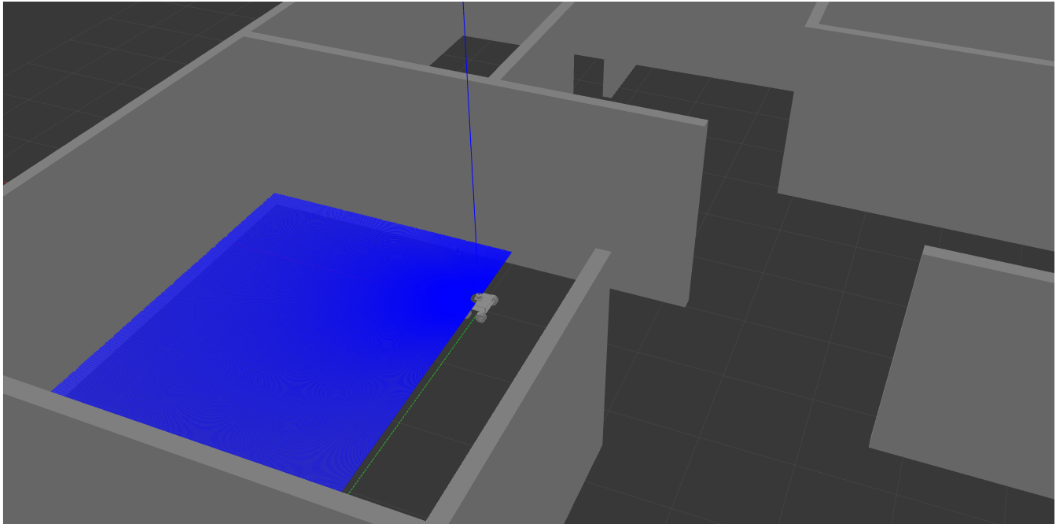

The robot was modeled in Fusion360 and converted to URDF using Fusion2URDF.
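
After exporting with Fusion2URDF, it can be worth checking that the URDF contains the expected revolute joints and limits before loading it in Gazebo. The sketch below uses only Python's standard `xml.etree.ElementTree`; the inline URDF fragment, its link and joint names, and the helper name `summarize_joints` are hypothetical stand-ins for the real exported file.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

# Hypothetical two-joint fragment standing in for the Fusion2URDF export.
# effort is in N·m; 2.9 N·m is roughly the 30 kg·cm servo rating.
SAMPLE_URDF = """<robot name="arm">
  <link name="base_link"/>
  <link name="link_1"/>
  <link name="link_2"/>
  <joint name="joint_1" type="revolute">
    <parent link="base_link"/>
    <child link="link_1"/>
    <axis xyz="0 0 1"/>
    <limit lower="-1.57" upper="1.57" effort="2.9" velocity="1.0"/>
  </joint>
  <joint name="joint_2" type="revolute">
    <parent link="link_1"/>
    <child link="link_2"/>
    <axis xyz="0 1 0"/>
    <limit lower="-1.2" upper="1.2" effort="2.9" velocity="1.0"/>
  </joint>
</robot>"""


def summarize_joints(urdf_xml: str) -> list[dict]:
    """Return name, type, parent/child links and limits for every <joint>."""
    root = ET.fromstring(urdf_xml)
    joints = []
    for joint in root.findall("joint"):
        limit = joint.find("limit")
        joints.append({
            "name": joint.get("name"),
            "type": joint.get("type"),
            "parent": joint.find("parent").get("link"),
            "child": joint.find("child").get("link"),
            # URDF defaults lower/upper to 0 when the attribute is omitted.
            "lower": float(limit.get("lower", 0.0)) if limit is not None else None,
            "upper": float(limit.get("upper", 0.0)) if limit is not None else None,
        })
    return joints


if __name__ == "__main__":
    joints = summarize_joints(SAMPLE_URDF)
    revolute = [j for j in joints if j["type"] == "revolute"]
    print(f"{len(revolute)} revolute joints")
    for j in joints:
        print(f'{j["name"]}: {j["parent"]} -> {j["child"]}, [{j["lower"]}, {j["upper"]}] rad')
```

Run against the full export of the six-servo arm, one would expect six revolute joints; a different count or type may point to a joint that was set up differently in the CAD model.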

Gazebo plugins for differential drive, odometry, and LaserScan were integrated to simulate the robot in a custom environment.

The software stack includes ROS packages such as move_base, gmapping, and RTAB-Map, which work in tandem with sensor inputs to generate accurate maps and enable autonomous navigation.

Installation involves cloning the repository, resolving dependencies with rosdep, and building the workspace with catkin_make.
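
The installation steps can be scripted so that they always run in the same order. The helper below is a hypothetical sketch: the names `install_steps` and `run_steps`, the repository URL, and the workspace path are placeholders, since the report does not give them. It resolves dependencies with rosdep before invoking catkin_make, because the build needs those dependencies present, and it only prints the commands unless `dry_run=False` is passed.

```python
from __future__ import annotations

import shlex
import subprocess
from pathlib import Path


def install_steps(repo_url: str, workspace: Path) -> list[tuple[Path, list[str]]]:
    """Return (working_directory, command) pairs in execution order."""
    src = workspace / "src"
    return [
        # 1. Clone the project into the workspace's src/ folder.
        (src, ["git", "clone", repo_url]),
        # 2. Install the system dependencies declared by the packages in src/.
        (workspace, ["rosdep", "install", "--from-paths", "src", "--ignore-src", "-r", "-y"]),
        # 3. Build the catkin workspace.
        (workspace, ["catkin_make"]),
    ]


def run_steps(steps: list[tuple[Path, list[str]]], dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them, stopping at the first failure."""
    for cwd, cmd in steps:
        if dry_run:
            print(f"(cd {shlex.quote(str(cwd))} && {shlex.join(cmd)})")
            continue
        cwd.mkdir(parents=True, exist_ok=True)
        subprocess.run(cmd, cwd=cwd, check=True)


if __name__ == "__main__":
    # Placeholder URL and path: the report does not give the real repository.
    steps = install_steps("https://example.com/your-org/your-repo.git", Path.home() / "catkin_ws")
    run_steps(steps)  # pass dry_run=False to actually run the commands
```

The helper assumes the ROS environment has already been sourced in the calling shell, since catkin_make is only on the PATH after sourcing, and that rosdep has been initialised once with `rosdep init` and `rosdep update`.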

Methodology

Our approach is twofold:

- Simulation Model: A detailed CAD model was developed and tested in Gazebo. The navigation stack utilizes move_base and SLAM algorithms to continuously update the map and compute optimal paths.

- Hardware Integration: The robot fuses data from LIDAR, Kinect, and wheel encoders to improve localization accuracy. An Intel RealSense T265 Tracking Camera further refines the odometry, enabling robust real-world navigation.
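
The report does not name the fusion method; in ROS a common choice is an extended Kalman filter, but that is an assumption here. To show why combining sensors improves localization accuracy, the sketch below fuses two noisy estimates of the same quantity, such as the x position reported by the wheel encoders and by the T265, assuming their errors are independent, using the scalar Kalman measurement update (equivalently, inverse-variance weighting). The `Estimate` class, the `fuse` function, and the variance values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A scalar Gaussian estimate: mean and variance."""
    mean: float
    variance: float


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Fuse two independent estimates of the same quantity (1-D Kalman update).

    Equivalent to inverse-variance weighting: the more certain source gets
    the larger weight, and the fused variance is smaller than either input.
    """
    if a.variance <= 0 or b.variance <= 0:
        raise ValueError("variances must be positive")
    gain = a.variance / (a.variance + b.variance)
    mean = a.mean + gain * (b.mean - a.mean)
    variance = (1.0 - gain) * a.variance
    return Estimate(mean, variance)


if __name__ == "__main__":
    # Hypothetical readings of the robot's x position (metres, metres^2).
    encoders = Estimate(mean=2.00, variance=0.04)
    tracking_camera = Estimate(mean=2.10, variance=0.01)
    fused = fuse(encoders, tracking_camera)
    print(f"x = {fused.mean:.3f} m, variance = {fused.variance:.4f} m^2")  # x = 2.080 m, variance = 0.0080 m^2
```

Because the fused variance, a.variance · b.variance / (a.variance + b.variance), is always below both inputs, an additional independent sensor tightens the estimate under these assumptions; a full localization stack applies the same idea to multi-dimensional states.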

Results and Discussion

Simulation tests in Gazebo validated the system's ability to generate both 2D and 3D maps. In real-world trials, the robot effectively navigated dynamic outdoor environments, demonstrating reliable obstacle avoidance and accurate localization. The combination of GMapping for rapid 2D mapping and RTAB-Map for richer 3D spatial awareness provides a comprehensive solution for autonomous navigation in GPS-restricted areas.

Conclusion

The 4WD Outdoor Navigation Robot integrates advanced hardware and software to achieve robust autonomous navigation. By combining detailed simulation with rigorous hardware integration, the robot reliably maps its environment and navigates complex terrains. Future work will focus on optimizing sensor fusion, reducing computational overhead, and extending the operational range to further enhance system performance.